publications

publications in reverse chronological order.

2025

- CVPR 2025

ClearSight: Visual Signal Enhancement for Object Hallucination Mitigation in Multimodal Large Language Models. Hao Yin, Guangzong Si, and Zilei Wang. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun 2025.

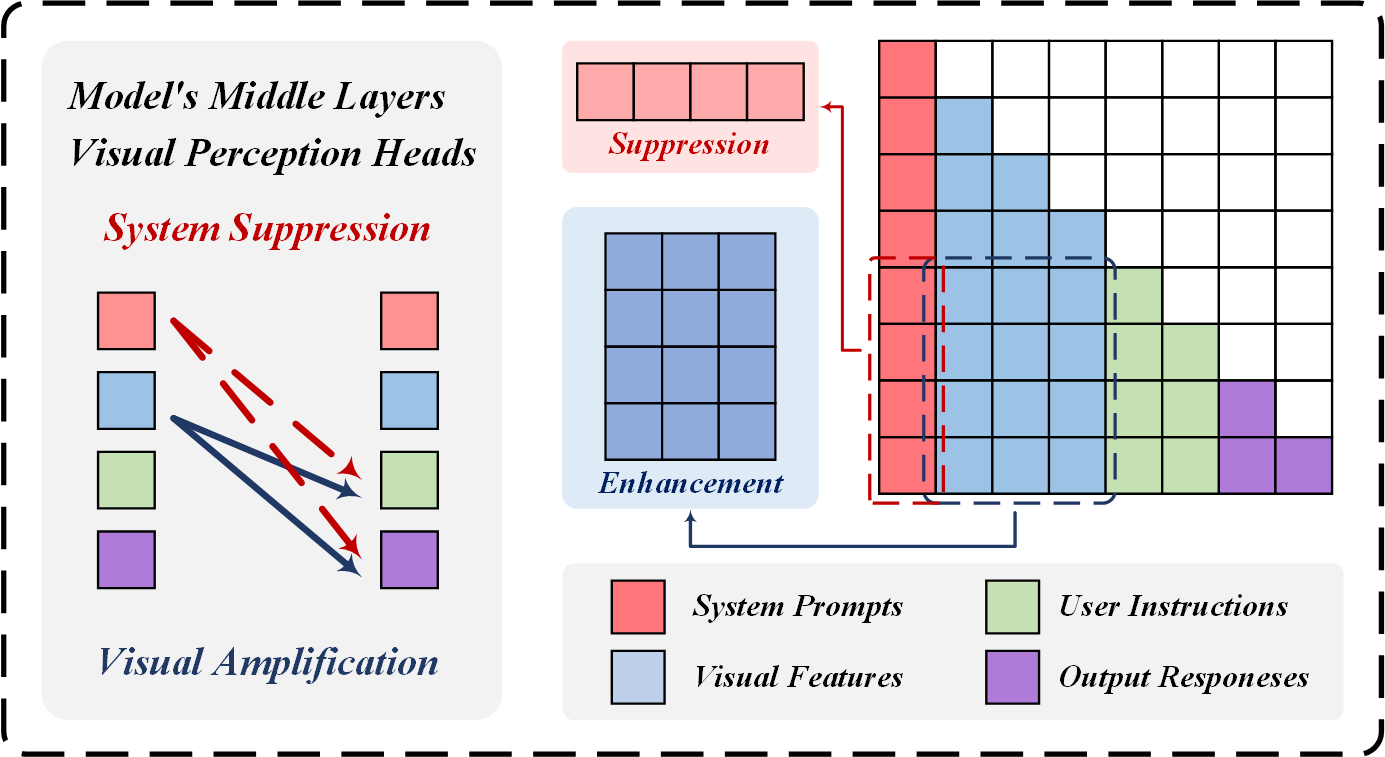

Contrastive decoding strategies are widely used to mitigate object hallucinations in multimodal large language models (MLLMs). By reducing over-reliance on language priors, these strategies ensure that generated content remains closely grounded in visual inputs, producing contextually accurate outputs. Since contrastive decoding requires no additional training or external tools, it offers both computational efficiency and versatility, making it highly attractive. However, these methods present two main limitations: (1) bluntly suppressing language priors can compromise the coherence and accuracy of generated content, and (2) processing contrastive inputs adds computational load, significantly slowing inference. To address these challenges, we propose Visual Amplification Fusion (VAF), a plug-and-play technique that enhances attention to visual signals within the model’s middle layers, where modality fusion predominantly occurs. This approach enables more effective capture of visual features, reducing the model’s bias toward the language modality. Experimental results demonstrate that VAF significantly reduces hallucinations across various MLLMs without affecting inference speed, while maintaining coherence and accuracy in generated outputs.

@inproceedings{yin2025clearsightvisualsignalenhancement,
  title = {ClearSight: Visual Signal Enhancement for Object Hallucination Mitigation in Multimodal Large Language Models},
  author = {Yin, Hao and Si, Guangzong and Wang, Zilei},
  year = {2025},
  month = jun,
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
}

- CVPR 2025

Lifting the Veil on Visual Information Flow in MLLMs: Unlocking Pathways to Faster Inference. Hao Yin, Guangzong Si, and Zilei Wang. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun 2025.

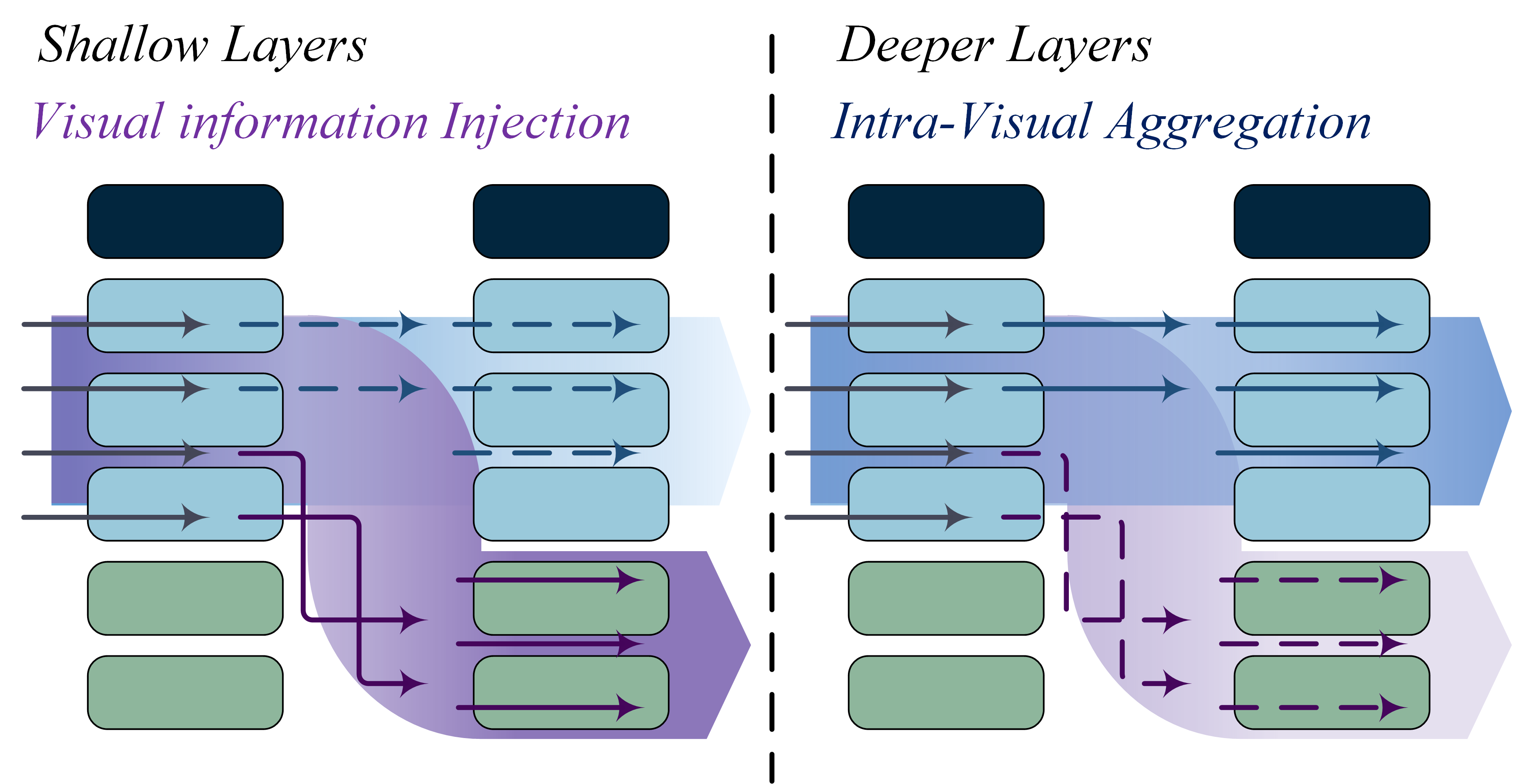

Multimodal large language models (MLLMs) improve performance on vision-language tasks by integrating visual features from pre-trained vision encoders into large language models (LLMs). However, how MLLMs process and utilize visual information remains unclear. In this paper, we uncover a shift in the dominant flow of visual information: (1) in shallow layers, image tokens and instruction tokens interact strongly, and most visual information is injected into instruction tokens to form cross-modal semantic representations; (2) in deeper layers, image tokens primarily interact with each other, aggregating the remaining visual information to optimize semantic representations within the visual modality. Based on these insights, we propose Hierarchical Modality-Aware Pruning (HiMAP), a plug-and-play inference acceleration method that dynamically prunes image tokens at specific layers, reducing computational costs by approximately 65% without sacrificing performance. Our findings offer a new understanding of visual information processing in MLLMs and provide a state-of-the-art solution for efficient inference.

@inproceedings{yin2025liftingveilvisualinformation,
  title = {Lifting the Veil on Visual Information Flow in MLLMs: Unlocking Pathways to Faster Inference},
  author = {Yin, Hao and Si, Guangzong and Wang, Zilei},
  year = {2025},
  month = jun,
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
}

- NeurIPS 2025

The Mirage of Performance Gains: Why Contrastive Decoding Fails to Mitigate Object Hallucinations in MLLMs. Hao Yin, Guangzong Si, and Zilei Wang. In Advances in Neural Information Processing Systems, Sep 2025.

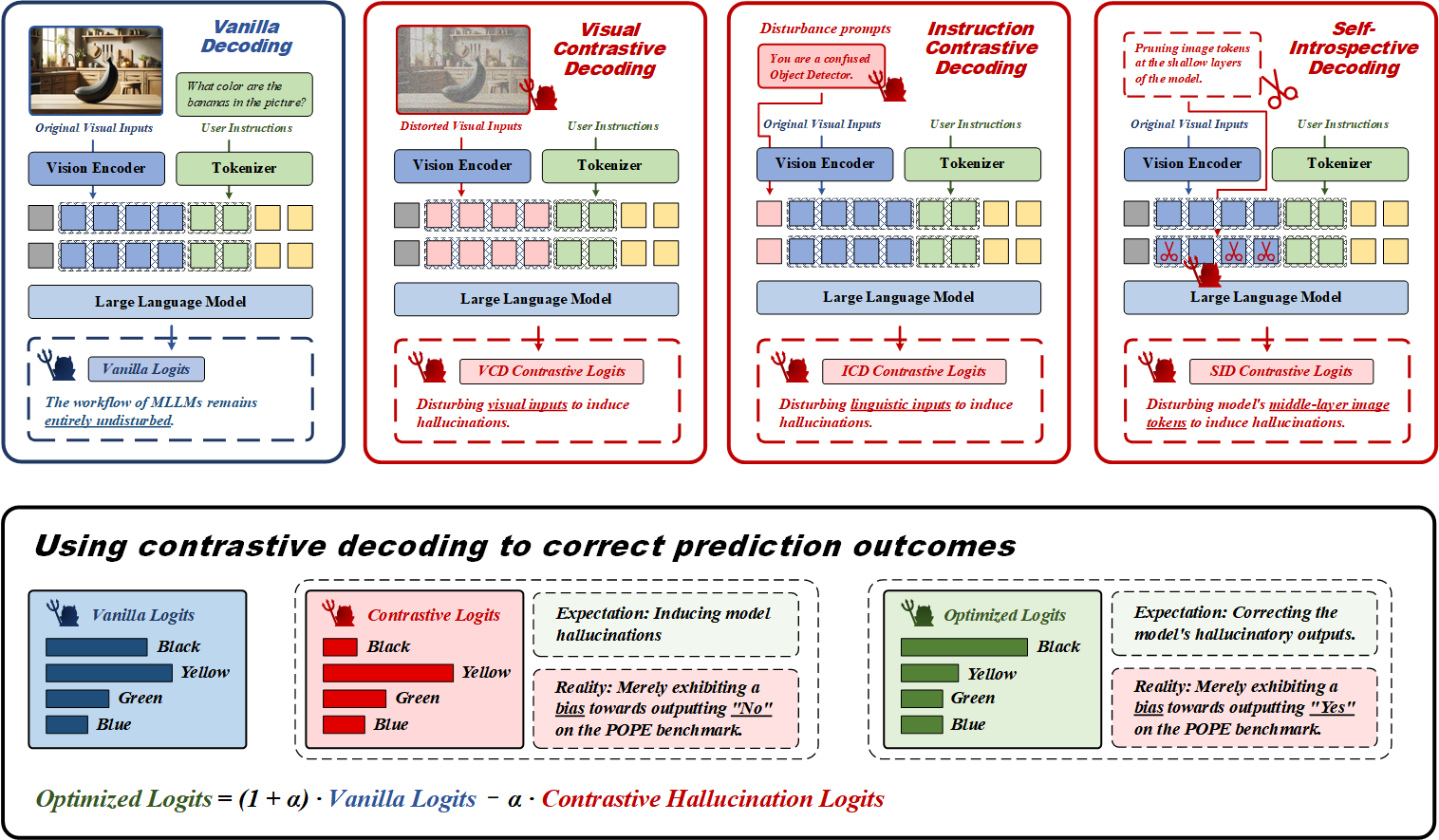

Contrastive decoding strategies are widely used to reduce hallucinations in multimodal large language models (MLLMs). These methods work by constructing contrastive samples to induce hallucinations and then suppressing them in the output distribution. However, this paper demonstrates that such approaches fail to effectively mitigate the hallucination problem. The performance improvements observed on the POPE benchmark are largely driven by two misleading factors: (1) crude, unidirectional adjustments to the model’s output distribution and (2) the adaptive plausibility constraint, which reduces the sampling strategy to greedy search. To further illustrate these issues, we introduce a series of spurious improvement methods and evaluate their performance against contrastive decoding techniques. Experimental results reveal that the observed performance gains in contrastive decoding are entirely unrelated to its intended goal of mitigating hallucinations. Our findings challenge common assumptions about the effectiveness of contrastive decoding strategies and pave the way for developing genuinely effective solutions to hallucinations in MLLMs.

@inproceedings{yin2025mirageperformancegainscontrastive,
  title = {The Mirage of Performance Gains: Why Contrastive Decoding Fails to Mitigate Object Hallucinations in MLLMs},
  author = {Yin, Hao and Si, Guangzong and Wang, Zilei},
  year = {2025},
  month = sep,
  booktitle = {Advances in Neural Information Processing Systems},
}